3.4 Lab Task: Setting Up and Installing a Hadoop Environment

I. Download Hadoop from the official site

Apache Hadoop releases: https://hadoop.apache.org/releases.html

On Windows, download the binary package for the version you need from the Apache site, then transfer it to the CentOS virtual machine with Xftp.

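II. Transfer Hadoop

0. In Xshell, run ls to list the current directory

1. In Xshell, click the Xftp button

2. Find the downloaded Hadoop···.tar.gz file and double-click it to start the upload

3. Wait for the transfer to complete

4. In Xshell, run ls again to confirm that the upload succeeded

5. In Xftp, create a new folder named Opt

6. Move the Hadoop···.tar.gz file into the Opt folder

7. Extract Hadoop

Switch back to Xshell.

① Change to the directory that contains hadoop-2.10.1.tar.gz

cd /home/bigdata/Opt

② Extract hadoop-2.10.1.tar.gz into the current directory

tar -zxvf hadoop-2.10.1.tar.gz

After extraction, a hadoop-2.10.1 directory appears in the current directory.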
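The unpacking step can also be wrapped in a small helper that fails early when the archive is missing. This is only a sketch: `extract_hadoop` is a hypothetical function name, not part of Hadoop, and it passes `-C` to `tar` so that the target directory is explicit instead of depending on the current directory.

```shell
# Unpack a release tarball into a target directory.
# Usage: extract_hadoop <tarball> <target-dir>
# (extract_hadoop is a hypothetical helper name used for illustration.)
extract_hadoop() {
    tarball=$1
    target=$2
    if [ ! -f "$tarball" ]; then
        echo "tarball not found: $tarball" >&2
        return 1
    fi
    mkdir -p "$target" || return 1
    # -x extract, -z gunzip, -f archive file, -C switch to the target directory first
    tar -xzf "$tarball" -C "$target"
}
```

With the paths used in this article, the call would be `extract_hadoop /home/bigdata/Opt/hadoop-2.10.1.tar.gz /home/bigdata/Opt`.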

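III. Configure Hadoop

Hadoop runs as Java processes, so a JDK must be installed before Hadoop is configured.

For downloading, installing and configuring the JDK, see the article "Linux中安装JDK" (懒笑翻的博客).

Passwordless SSH login should also be configured beforehand.

1. For a pseudo-distributed Hadoop installation, first locate the configuration directory (/home/bigdata/Opt/hadoop-2.10.1/etc/hadoop)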

2. Edit the core-site.xml configuration file

Run:

vim /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/core-site.xml

After pressing Enter, press i to switch to insert mode.

Add the following configuration to core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.232.131:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/bigdata/Opt/hadoop-2.10.1/tmp</value>

</property>

</configuration>

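Note that the file already contains a <configuration></configuration> element; place the property entries inside it rather than adding a second one. Replace 192.168.232.131 with the IP address of your own virtual machine.

Press ESC to leave insert mode, then type :wq to save and exit core-site.xml.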
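To double-check what was saved, a value can be read back from the file. The helper below is a rough sketch, not a real XML parser: `get_hadoop_prop` is a hypothetical name, and it assumes each `<name>` and `<value>` element sits on a single line, as in the listing above.

```shell
# Print the <value> that follows <name>KEY</name> in a Hadoop *-site.xml file.
# Usage: get_hadoop_prop <file> <key>
# (get_hadoop_prop is a hypothetical helper; it is line based, not a full XML parser.)
get_hadoop_prop() {
    file=$1
    key=$2
    awk -v key="$key" '
        # Remember that the wanted <name> element has been seen.
        index($0, "<name>" key "</name>") { found = 1 }
        # The first <value> element at or after that point is the answer.
        found && match($0, /<value>.*<\/value>/) {
            # Drop the 7-character opening tag and the 8-character closing tag.
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$file"
}
```

For example, `get_hadoop_prop /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/core-site.xml fs.defaultFS` should print the hdfs:// address entered above. Once the Hadoop commands are on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.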

3、修改 hadoop-env.sh 文件配置Hadoop运行环境,用来定义Java环境变量。

输入命令:

vim /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/hadoop-env.sh输入下图中配置信息【路径和文件名对应你自己的哈】:

配置信息输入完后,按ESC键,然后 :wq 保存并退出

配置信息输入完后,按ESC键,然后 :wq 保存并退出

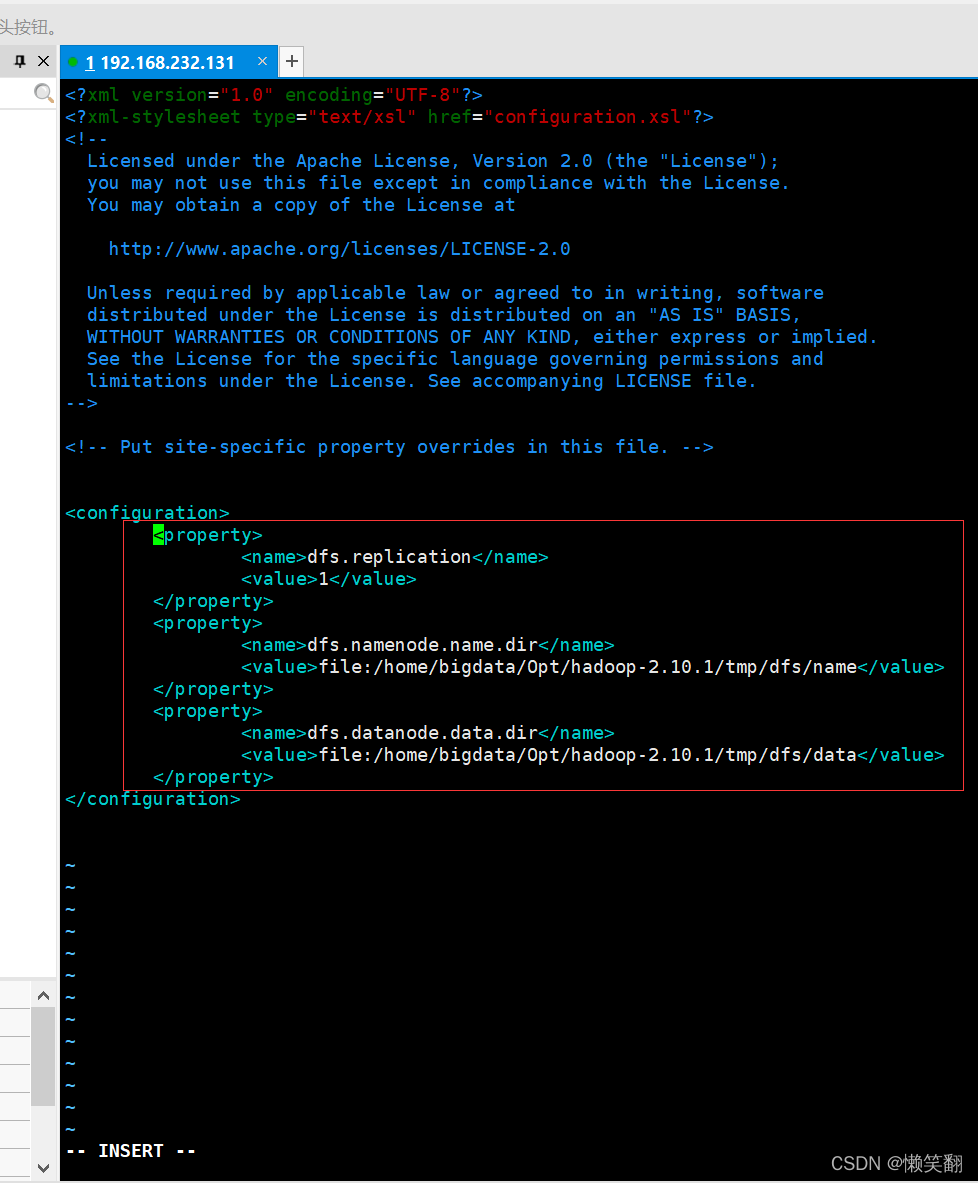
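In case the screenshot is not available, here is a sketch of the kind of line this step usually sets in hadoop-env.sh. The JDK directory below is a placeholder assumption (only the version, 1.8.0_331, appears in the log output further down in this article); substitute the directory where your own JDK is installed.

```shell
# hadoop-env.sh (fragment)
# Hypothetical JDK directory: replace it with your own JDK installation path.
export JAVA_HOME=/usr/local/java/jdk1.8.0_331
```

Setting the path explicitly here, rather than leaving the shipped `export JAVA_HOME=${JAVA_HOME}` line, avoids start-up failures when the daemons are launched in a shell that has no JAVA_HOME defined.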

4. Edit hdfs-site.xml to configure HDFS

vim /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/bigdata/Opt/hadoop-2.10.1/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/bigdata/Opt/hadoop-2.10.1/tmp/dfs/data</value>

</property>

</configuration>

Press ESC to leave insert mode, then type :wq to save and exit.

5. Edit mapred-site.xml to configure the MapReduce parameters

① The /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop directory contains only mapred-site.xml.template.

② Copy mapred-site.xml.template to create mapred-site.xml. From inside that directory, run:

cp mapred-site.xml.template mapred-site.xml

③ Edit mapred-site.xml to specify that Hadoop MapReduce jobs will run on the YARN resource scheduler

vim /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Press ESC to leave insert mode, then type :wq to save and exit.

6. Edit yarn-site.xml to configure the cluster resource manager (YARN)

vim /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.232.131</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

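Press ESC to leave insert mode, then type :wq to save and exit.

7. Configure the Hadoop environment variables

This requires root privileges, so switch to root first:

su root

Run:

vim /etc/profile

and append the following lines:

#set hadoop environment

export HADOOP_HOME=/home/bigdata/Opt/hadoop-2.10.1

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Press ESC to leave insert mode, then type :wq to save and exit.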
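Once the profile has been reloaded with `source /etc/profile` (step 8), a quick check along the following lines can confirm that the variables took effect. This is a sketch only; `check_hadoop_env` is a hypothetical helper name, not a Hadoop command.

```shell
# Verify that HADOOP_HOME is set and that its bin and sbin directories are on PATH.
# (check_hadoop_env is a hypothetical helper name used for illustration.)
check_hadoop_env() {
    if [ -z "${HADOOP_HOME:-}" ]; then
        echo "HADOOP_HOME is not set" >&2
        return 1
    fi
    for sub in bin sbin; do
        # Wrap PATH in colons so that every entry can be matched as ":dir:".
        case ":$PATH:" in
            *":$HADOOP_HOME/$sub:"*) ;;
            *) echo "$HADOOP_HOME/$sub is not on PATH" >&2; return 1 ;;
        esac
    done
}
```

If `check_hadoop_env` returns successfully, running `hadoop version` should then print the installed release (2.10.1 in this article).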

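8. Reload the configuration

Run:

source /etc/profile

9. Once configuration is complete, format the NameNode

Change into the /home/bigdata/Opt/hadoop-2.10.1/sbin directory:

cd /home/bigdata/Opt/hadoop-2.10.1/sbin

Run:

hdfs namenode -format

The full console output looks like this: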

[root@localhost hadoop]# cd /home/bigdata/Opt/hadoop-2.10.1/sbin

[root@localhost sbin]# hdfs namenode -format

22/04/24 10:03:35 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.10.1

STARTUP_MSG: classpath = /home/bigdata/Opt/hadoop-2.10.1/etc/hadoop:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-collections-3.2.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/servlet-api-2.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jetty-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jetty-util-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jetty-sslengine-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jsp-api-2.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jersey-core-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jersey-json-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jettison-1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/stax-api-1.0-2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/activation-1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jersey-server-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/asm-3.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/log4j-1.2.17.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jets3t-0.9.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-lang-2.6.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-configuration-1.6.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-digester
-1.8.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-beanutils-1.9.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-lang3-3.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/avro-1.7.7.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/paranamer-2.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/snappy-java-1.0.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-compress-1.19.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/gson-2.2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/hadoop-auth-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/nimbus-jose-jwt-7.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/zookeeper-3.4.14.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/spotbugs-annotations-3.1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/netty-3.10.6.Final.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/curator-framework-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/curator-client-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jsc
h-0.1.55.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/curator-recipes-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/stax2-api-3.1.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/woodstox-core-5.0.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/junit-4.11.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/hamcrest-core-1.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/mockito-all-1.8.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/hadoop-annotations-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/guava-11.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/jsr305-3.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-cli-1.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/xmlenc-0.52.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/httpclient-4.5.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/httpcore-4.4.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-codec-1.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-io-2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/commons-net-3.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/hadoop-common-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/hadoop-common-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/hadoop-nfs-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-logging-1.1.3.j
ar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jsr305-3.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/netty-3.10.6.Final.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/guava-11.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-io-2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/asm-3.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/hadoop-hdfs-client-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/okio-1.6.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/netty-all-4.1.50.Final.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/xercesImpl-2.12.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/xml-apis-1.4.01.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jackson-databind-2.9.10.6.jar:/home/bigdata/Opt/had
oop-2.10.1/share/hadoop/hdfs/lib/jackson-annotations-2.9.10.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/lib/jackson-core-2.9.10.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-client-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-client-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-native-client-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-native-client-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-rbf-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-rbf-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/hdfs/hadoop-hdfs-nfs-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-cli-1.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/log4j-1.2.17.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-compress-1.19.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/servlet-api-2.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-codec-1.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jersey-core-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jersey-client-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/guice-3.0.jar:/home/b
igdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/javax.inject-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-io-2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jersey-server-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/asm-3.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jersey-json-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jettison-1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-math3-3.1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/xmlenc-0.52.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/httpclient-4.5.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/httpcore-4.4.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-net-3.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jetty-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jetty-sslengine-6.1.26.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jsp-api-2.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jets3t-0.9.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/java-xmlbuilder-0.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-configuration-1.6.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-digester-1.8.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-beanutils-1.9.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-lang3-3.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/avro-1.7.7.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/paranamer-2.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/snappy-j
ava-1.0.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/gson-2.2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/nimbus-jose-jwt-7.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jcip-annotations-1.0-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/json-smart-1.3.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/apacheds-i18n-2.0.0-M15.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/api-asn1-api-1.0.0-M20.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/api-util-1.0.0-M20.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/zookeeper-3.4.14.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/spotbugs-annotations-3.1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/audience-annotations-0.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/netty-3.10.6.Final.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/curator-framework-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/curator-client-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jsch-0.1.55.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/curator-recipes-2.13.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/htrace-core4-4.1.0-incubating.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/stax2-api-3.1.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/woodstox-core-5.0.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/metrics-core-3.0.
1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/fst-2.50.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/java-util-1.9.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/json-io-2.5.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-lang-2.6.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/guava-11.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jsr305-3.0.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/activation-1.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-api-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-common-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-registry-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-common-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-tests-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-client-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-server-router-2.10.1.jar:/home/bigdata/Opt/hado
op-2.10.1/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/avro-1.7.7.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/snappy-java-1.0.5.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/commons-compress-1.19.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/hadoop-annotations-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/asm-3.2.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/netty-3.10.6.Final.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/guice-3.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/javax.inject-1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/junit-4.11.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapred
uce/hadoop-mapreduce-client-core-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.1.jar:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.10.1-tests.jar:/home/bigdata/Opt/hadoop-2.10.1/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://github.com/apache/hadoop -r 1827467c9a56f133025f28557bfc2c562d78e816; compiled by 'centos' on 2020-09-14T13:17Z

STARTUP_MSG: java = 1.8.0_331

************************************************************/

22/04/24 10:03:35 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

22/04/24 10:03:35 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-b591d3f7-fbc8-4c53-84df-5b3722ef0456

22/04/24 10:03:36 INFO namenode.FSEditLog: Edit logging is async:true

22/04/24 10:03:36 INFO namenode.FSNamesystem: KeyProvider: null

22/04/24 10:03:36 INFO namenode.FSNamesystem: fsLock is fair: true

22/04/24 10:03:36 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

22/04/24 10:03:36 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

22/04/24 10:03:36 INFO namenode.FSNamesystem: supergroup = supergroup

22/04/24 10:03:36 INFO namenode.FSNamesystem: isPermissionEnabled = true

22/04/24 10:03:36 INFO namenode.FSNamesystem: HA Enabled: false

22/04/24 10:03:36 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

22/04/24 10:03:36 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

22/04/24 10:03:36 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

22/04/24 10:03:36 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

22/04/24 10:03:36 INFO blockmanagement.BlockManager: The block deletion will start around 2022 Apr 24 10:03:36

22/04/24 10:03:36 INFO util.GSet: Computing capacity for map BlocksMap

22/04/24 10:03:36 INFO util.GSet: VM type = 64-bit

22/04/24 10:03:36 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB

22/04/24 10:03:36 INFO util.GSet: capacity = 2^21 = 2097152 entries

22/04/24 10:03:36 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

22/04/24 10:03:36 WARN conf.Configuration: No unit for dfs.heartbeat.interval(3) assuming SECONDS

22/04/24 10:03:36 WARN conf.Configuration: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

22/04/24 10:03:36 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

22/04/24 10:03:36 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

22/04/24 10:03:36 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

22/04/24 10:03:36 INFO blockmanagement.BlockManager: defaultReplication = 1

22/04/24 10:03:36 INFO blockmanagement.BlockManager: maxReplication = 512

22/04/24 10:03:36 INFO blockmanagement.BlockManager: minReplication = 1

22/04/24 10:03:36 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

22/04/24 10:03:36 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

22/04/24 10:03:36 INFO blockmanagement.BlockManager: encryptDataTransfer = false

22/04/24 10:03:36 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

22/04/24 10:03:36 INFO namenode.FSNamesystem: Append Enabled: true

22/04/24 10:03:36 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

22/04/24 10:03:36 INFO util.GSet: Computing capacity for map INodeMap

22/04/24 10:03:36 INFO util.GSet: VM type = 64-bit

22/04/24 10:03:36 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

22/04/24 10:03:36 INFO util.GSet: capacity = 2^20 = 1048576 entries

22/04/24 10:03:36 INFO namenode.FSDirectory: ACLs enabled? false

22/04/24 10:03:36 INFO namenode.FSDirectory: XAttrs enabled? true

22/04/24 10:03:36 INFO namenode.NameNode: Caching file names occurring more than 10 times

22/04/24 10:03:36 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: falseskipCaptureAccessTimeOnlyChange: false

22/04/24 10:03:36 INFO util.GSet: Computing capacity for map cachedBlocks

22/04/24 10:03:36 INFO util.GSet: VM type = 64-bit

22/04/24 10:03:36 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

22/04/24 10:03:36 INFO util.GSet: capacity = 2^18 = 262144 entries

22/04/24 10:03:36 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

22/04/24 10:03:36 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

22/04/24 10:03:36 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

22/04/24 10:03:36 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

22/04/24 10:03:36 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

22/04/24 10:03:36 INFO util.GSet: Computing capacity for map NameNodeRetryCache

22/04/24 10:03:36 INFO util.GSet: VM type = 64-bit

22/04/24 10:03:36 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

22/04/24 10:03:36 INFO util.GSet: capacity = 2^15 = 32768 entries

22/04/24 10:03:36 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1974055419-127.0.0.1-1650819816481

22/04/24 10:03:36 INFO common.Storage: Storage directory /home/bigdata/Opt/hadoop-2.10.1/tmp/dfs/name has been successfully formatted.

22/04/24 10:03:36 INFO namenode.FSImageFormatProtobuf: Saving image file /home/bigdata/Opt/hadoop-2.10.1/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

22/04/24 10:03:36 INFO namenode.FSImageFormatProtobuf: Image file /home/bigdata/Opt/hadoop-2.10.1/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds .

22/04/24 10:03:36 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

22/04/24 10:03:36 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.

22/04/24 10:03:36 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/
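The line that matters in this output is the one reporting that the storage directory "has been successfully formatted". As a sketch, assuming the console output was saved to a file (the function name `format_succeeded` and the file name `format.log` are illustrative assumptions), the check can be scripted:

```shell
# Return success if the saved output of `hdfs namenode -format` reports a formatted directory.
# Usage: format_succeeded <log-file>
# (format_succeeded is a hypothetical helper name.)
format_succeeded() {
    grep -q 'has been successfully formatted' "$1"
}

# Example (commented out because it needs a working Hadoop installation):
#   hdfs namenode -format 2>&1 | tee format.log
#   format_succeeded format.log && echo "NameNode formatted"
```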

10. Start all services

Change into the /home/bigdata/Opt/hadoop-2.10.1/sbin directory:

cd /home/bigdata/Opt/hadoop-2.10.1/sbin

Run:

./start-all.sh

Use the jps command to check whether all services have started.
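For a pseudo-distributed Hadoop 2.x setup started with start-all.sh, jps is normally expected to list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, plus Jps itself. The helper below is a sketch with a hypothetical name, `missing_daemons`, that reads jps output and prints whichever of those five are absent.

```shell
# Read `jps` output on stdin and print each expected daemon that is absent.
# (missing_daemons is a hypothetical helper name used for illustration.)
missing_daemons() {
    jps_out=$(cat)
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <MainClass>"; compare the class name as a whole field
        # so that NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }'; then
            echo "$d"
        fi
    done
}
```

Running `jps | missing_daemons` prints nothing when all five daemons are up; any name it prints points to the log file to inspect under the Hadoop logs directory.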

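11. Start the NameNode and DataNode

start-all.sh in the previous step already starts HDFS; use start-dfs.sh when only the HDFS daemons are needed.

Change into the /home/bigdata/Opt/hadoop-2.10.1/sbin directory:

cd /home/bigdata/Opt/hadoop-2.10.1/sbin

Run:

./start-dfs.sh

12. HDFS also provides a web UI, served over HTTP on port 50070 by default

13. Hadoop cluster (YARN) information is likewise available through a web UI, served over HTTP on port 8088 by default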
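The two addresses can be built from the host configured earlier. This is a minimal sketch: `hadoop_web_urls` is a hypothetical helper name, and the ports are the Hadoop 2.x defaults named in steps 12 and 13.

```shell
# Print the HDFS and YARN web UI addresses for a given host (Hadoop 2.x default ports).
# (hadoop_web_urls is a hypothetical helper name used for illustration.)
hadoop_web_urls() {
    host=$1
    echo "http://$host:50070"   # HDFS NameNode web UI
    echo "http://$host:8088"    # YARN ResourceManager web UI
}

# Example (commented out because it needs a running cluster and curl):
#   hadoop_web_urls 192.168.232.131 | while read -r url; do
#       curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```

A status code of 200 (or a redirect code) from the commented loop suggests the page is reachable; the same URLs can simply be opened in a browser on the Windows host.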

Default Hadoop ports and their uses (Hadoop 2.x)

| Port | Purpose |
| --- | --- |
| 9000 | fs.defaultFS, e.g. hdfs://172.25.40.171:9000 |
| 9001 | dfs.namenode.rpc-address; DataNodes connect to this port |
| 50070 | dfs.namenode.http-address |
| 50470 | dfs.namenode.https-address |
| 50100 | dfs.namenode.backup.address |
| 50105 | dfs.namenode.backup.http-address |
| 50090 | dfs.namenode.secondary.http-address, e.g. 172.25.39.166:50090 |
| 50091 | dfs.namenode.secondary.https-address, e.g. 172.25.39.166:50091 |
| 50020 | dfs.datanode.ipc.address |
| 50075 | dfs.datanode.http.address |
| 50475 | dfs.datanode.https.address |
| 50010 | dfs.datanode.address; the DataNode data transfer port |
| 8480 | dfs.journalnode.http-address (the JournalNode RPC port, dfs.journalnode.rpc-address, defaults to 8485) |
| 8481 | dfs.journalnode.https-address |
| 8032 | yarn.resourcemanager.address |
| 8088 | yarn.resourcemanager.webapp.address; the YARN HTTP port |
| 8090 | yarn.resourcemanager.webapp.https.address |
| 8030 | yarn.resourcemanager.scheduler.address |
| 8031 | yarn.resourcemanager.resource-tracker.address |
| 8033 | yarn.resourcemanager.admin.address |
| 8042 | yarn.nodemanager.webapp.address |
| 8040 | yarn.nodemanager.localizer.address |
| 8188 | yarn.timeline-service.webapp.address |
| 10020 | mapreduce.jobhistory.address |
| 19888 | mapreduce.jobhistory.webapp.address |
| 2888 | ZooKeeper; on the Leader, listens for Follower connections |
| 3888 | ZooKeeper; used for Leader election |
| 2181 | ZooKeeper; listens for client connections |
| 60010 | hbase.master.info.port; the HMaster HTTP port |
| 60000 | hbase.master.port; the HMaster RPC port |
| 60030 | hbase.regionserver.info.port; the HRegionServer HTTP port |
| 60020 | hbase.regionserver.port; the HRegionServer RPC port |
| 8080 | hbase.rest.port; the HBase REST server port |
| 10000 | hive.server2.thrift.port |
| 9083 | hive.metastore.uris |
